MathType - Gradient descent is an iterative optimization algorithm for finding local minima of multivariate functions. At each step, the algorithm moves in the opposite direction of the gradient, thereby reducing the value of the function.
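
The update described above, $x_{k+1} = x_k - \eta \nabla f(x_k)$, can be sketched in a few lines. This is a minimal illustration, not MathType's code: the function name `gradient_descent`, the fixed learning rate `learning_rate` ($\eta$), the stopping tolerance `tol`, and the example function $f(x, y) = (x - 3)^2 + 2(y + 1)^2$ are all hypothetical choices made for the example.

```python
from typing import Callable, Sequence

Vector = list[float]


def gradient_descent(
    grad: Callable[[Vector], Vector],
    x0: Sequence[float],
    learning_rate: float = 0.1,
    max_iters: int = 1000,
    tol: float = 1e-8,
) -> Vector:
    """Minimize a differentiable function, given its gradient (hypothetical helper).

    Each iteration steps against the gradient: x <- x - learning_rate * grad(x).
    Stops when the gradient's Euclidean norm drops below `tol` or after `max_iters` steps.
    """
    x = list(x0)
    for _ in range(max_iters):
        g = grad(x)
        if sum(gi * gi for gi in g) ** 0.5 < tol:
            break  # gradient is (numerically) zero: we are at a stationary point
        x = [xi - learning_rate * gi for xi, gi in zip(x, g)]
    return x


# Example: f(x, y) = (x - 3)^2 + 2 * (y + 1)^2 has its unique minimum at (3, -1).
def grad_f(v: Vector) -> Vector:
    x, y = v
    return [2 * (x - 3), 4 * (y + 1)]


result = gradient_descent(grad_f, [0.0, 0.0])
print([round(c, 6) for c in result])  # [3.0, -1.0]
```

With a fixed step size, the learning rate must be small enough for the iteration to contract; here each step shrinks the error in $x$ by a factor of 0.8 and in $y$ by 0.6. A step that is too large would overshoot and diverge instead.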

By a mysterious writer

Description

Solved I. Solve the following utility maximization problem

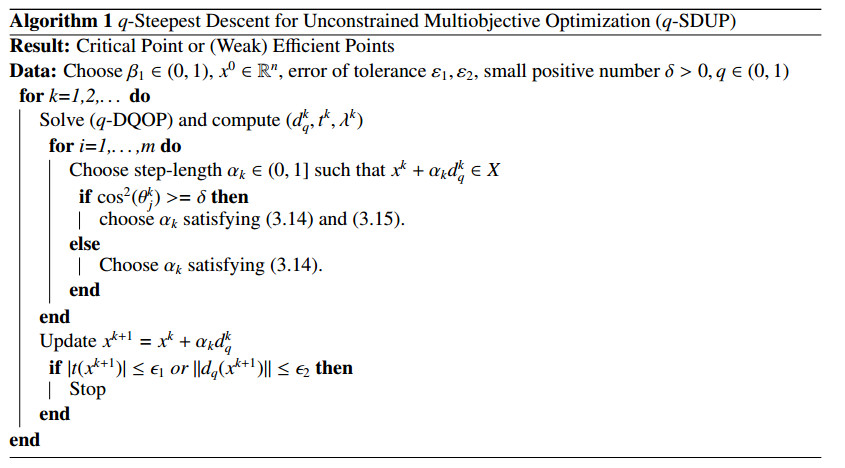

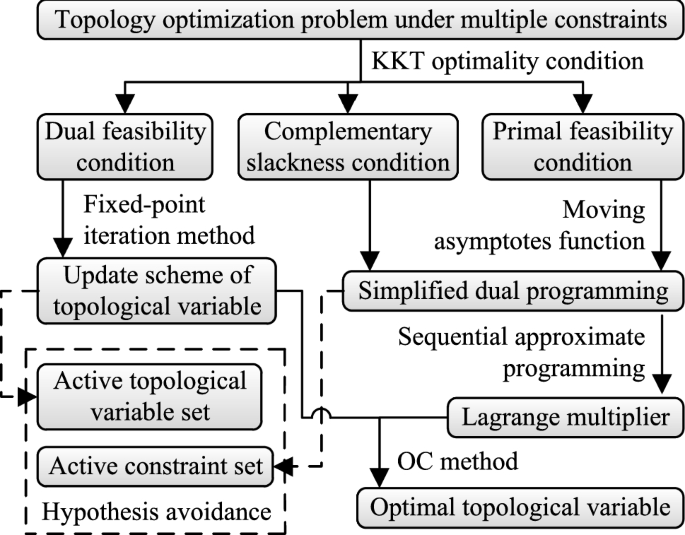

An optimality criteria method hybridized with dual programming for topology optimization under multiple constraints by moving asymptotes approximation

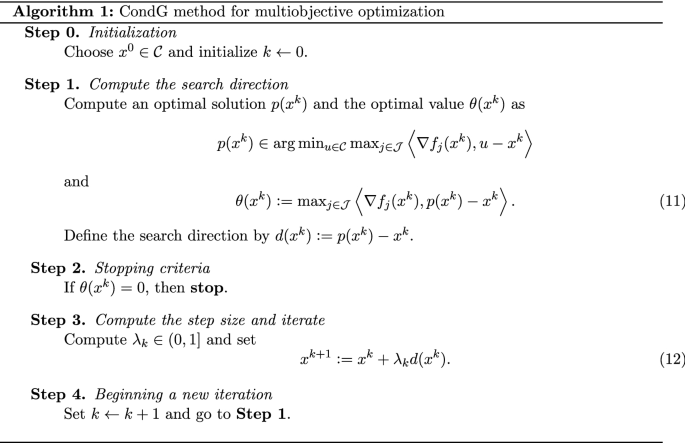

Conditional gradient method for multiobjective optimization

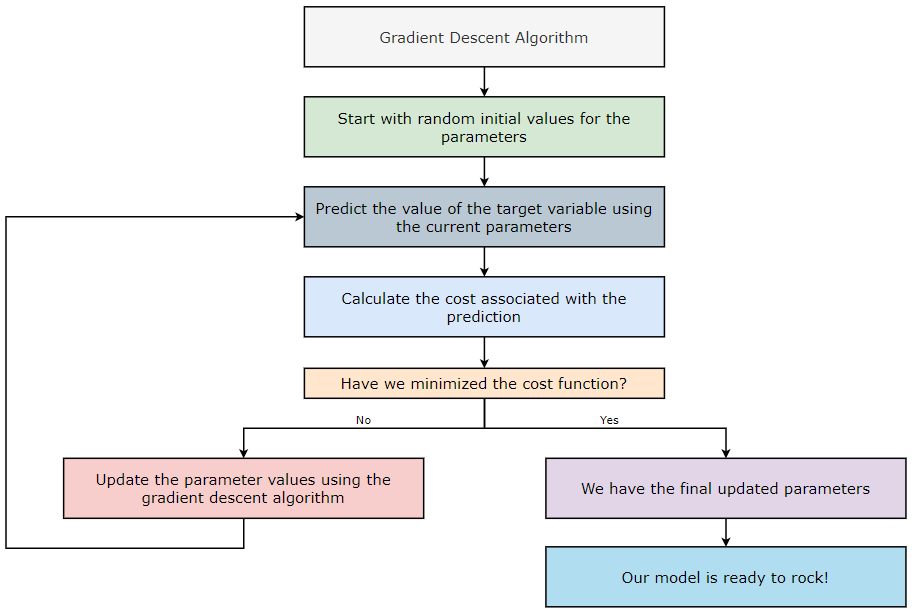

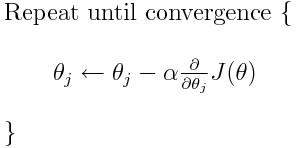

Gradient Descent: Gradient descent is an iterative optimization algorithm used to find local minima…, by Kucharlapatiaparna

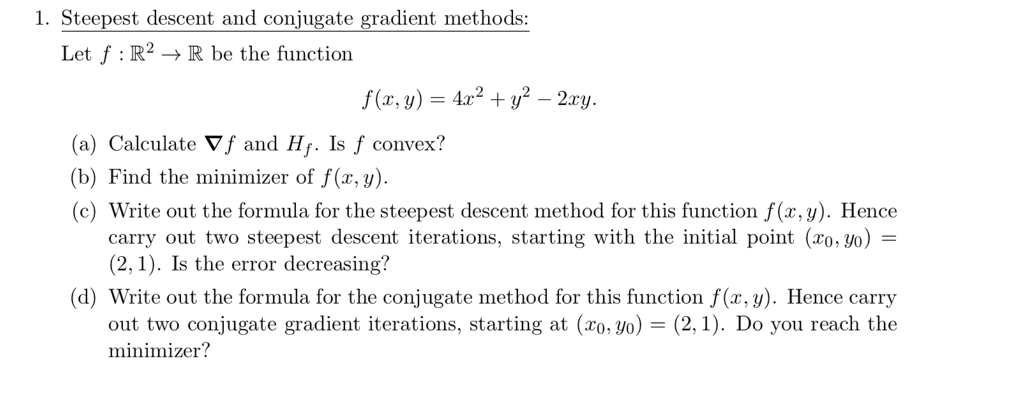

[Solved] 4. Gradient descent is a first-order iterative optimisation

The Gradient Descent Algorithm – Towards AI

Can gradient descent be used to find minima and maxima of functions? If not, then why not? - Quora
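
In short, gradient descent itself only seeks minima, but a local maximum of $f$ is a local minimum of $-f$. Running descent on $-f$ turns the update into $x_{k+1} = x_k + \eta f'(x_k)$, which is gradient ascent. The sketch below is a one-dimensional illustration under that assumption. The helper name `gradient_ascent`, its parameters, and the example $f(x) = 5 - (x - 2)^2$ are hypothetical.

```python
from typing import Callable


def gradient_ascent(
    grad: Callable[[float], float],
    x0: float,
    learning_rate: float = 0.1,
    max_iters: int = 1000,
    tol: float = 1e-10,
) -> float:
    """Find a local maximum of a 1-D function by stepping *along* its derivative."""
    x = x0
    for _ in range(max_iters):
        g = grad(x)
        if abs(g) < tol:
            break  # derivative is (numerically) zero: stationary point reached
        # Descent on -f: x - lr * (-g) is the same as x + lr * g.
        x += learning_rate * g
    return x


# f(x) = 5 - (x - 2)^2 has its maximum at x = 2, and f'(x) = -2 * (x - 2).
x_max = gradient_ascent(lambda x: -2 * (x - 2), x0=-1.0)
print(round(x_max, 6))  # 2.0
```

Like descent, this finds only a *local* extremum near the starting point. It also needs the same care with the step size.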

proof explanation - How to get this inequality in Gradient Descent algorithm? - Mathematics Stack Exchange

Gradient Descent Algorithm

Gradient Descent algorithm showing minimization of cost function

Optimization Techniques used in Classical Machine Learning ft: Gradient Descent, by Manoj Hegde

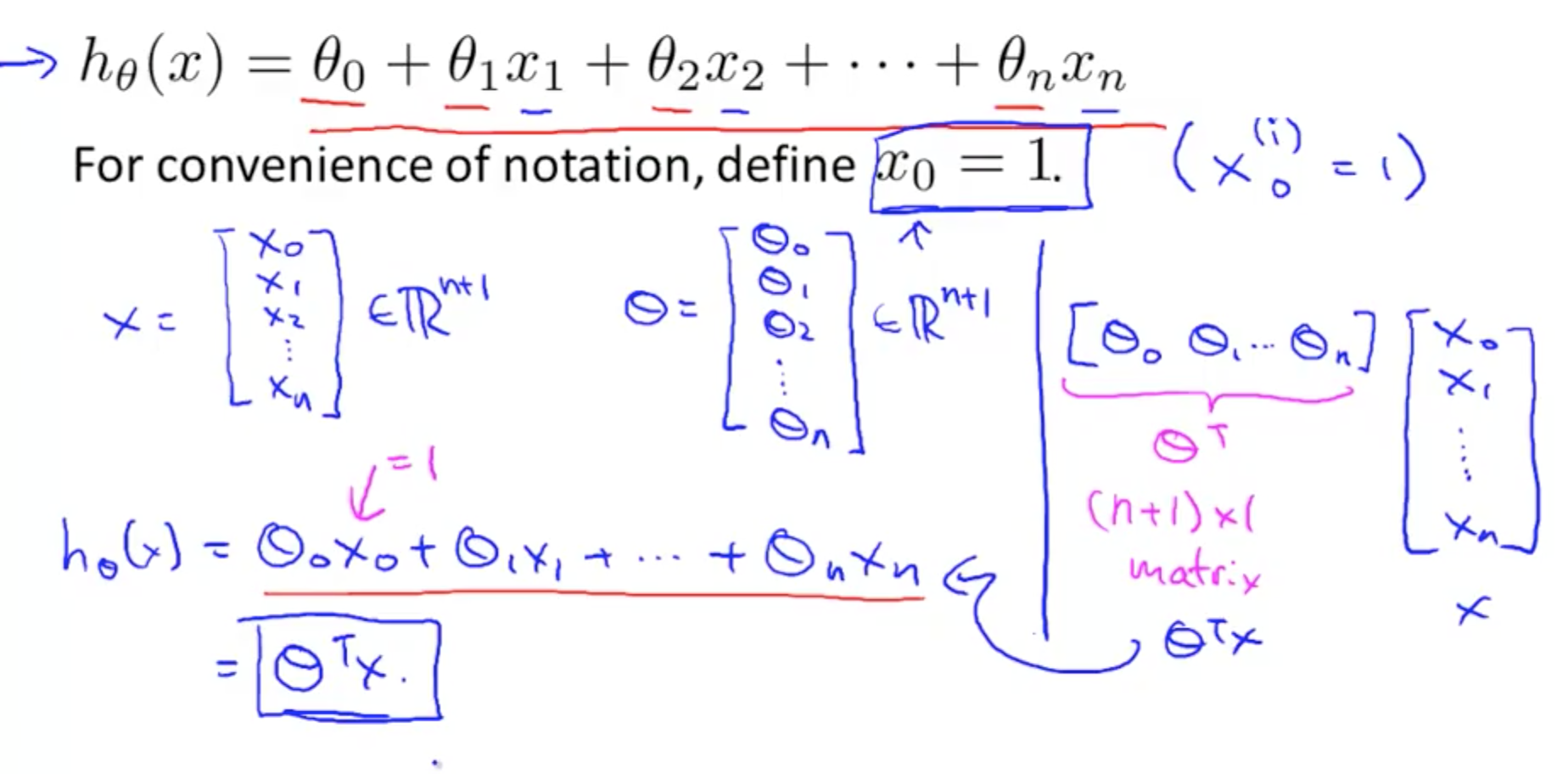

Linear Regression with Multiple Variables - Machine Learning, Deep Learning, and Computer Vision
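
For linear regression with several features, the "cost function" being minimized is commonly the mean squared error, $J(\theta) = \frac{1}{2m}\sum_{i=1}^{m} (\theta^\top x^{(i)} - y^{(i)})^2$. Its gradient is $\frac{\partial J}{\partial \theta_j} = \frac{1}{m}\sum_{i=1}^{m} (\theta^\top x^{(i)} - y^{(i)})\, x^{(i)}_j$. Below is a minimal batch gradient descent sketch under that assumption. The function name `fit_linear_regression`, the learning rate, the iteration count, and the toy data (generated exactly from $y = 1 + 2x_1 + 3x_2$) are made up for illustration.

```python
def fit_linear_regression(
    X: list[list[float]],
    y: list[float],
    learning_rate: float = 0.1,
    num_iters: int = 5000,
) -> list[float]:
    """Batch gradient descent on J(theta) = 1/(2m) * sum_i (theta . x_i - y_i)^2.

    A column of ones is prepended to X so that theta[0] acts as the intercept.
    """
    m = len(X)
    rows = [[1.0] + list(x) for x in X]
    theta = [0.0] * len(rows[0])
    for _ in range(num_iters):
        # Residuals h_theta(x_i) - y_i for every training example.
        residuals = [
            sum(t * xj for t, xj in zip(theta, row)) - yi
            for row, yi in zip(rows, y)
        ]
        # dJ/dtheta_j = (1/m) * sum_i residual_i * x_ij
        grad = [
            sum(r * row[j] for r, row in zip(residuals, rows)) / m
            for j in range(len(theta))
        ]
        # Update all parameters simultaneously.
        theta = [t - learning_rate * g for t, g in zip(theta, grad)]
    return theta


# Toy data generated exactly from y = 1 + 2*x1 + 3*x2.
X = [[0, 0], [1, 0], [0, 1], [1, 1], [2, 1]]
y = [1, 3, 4, 6, 8]
theta = fit_linear_regression(X, y)
print([round(t, 4) for t in theta])  # [1.0, 2.0, 3.0]
```

The features here are already on similar scales. With features of very different magnitudes, scaling them first (for example, to zero mean and unit variance) usually lets a single learning rate work for all parameters.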