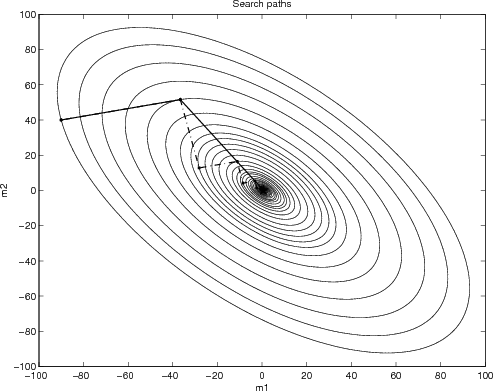

optimization - How to show that the method of steepest descent does not converge in a finite number of steps? - Mathematics Stack Exchange

By a mysterious writer

Description

I have a function,

$$f(\mathbf{x})=x_1^2+4x_2^2-4x_1-8x_2,$$

which can also be expressed as

$$f(\mathbf{x})=(x_1-2)^2+4(x_2-1)^2-8.$$
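One way to argue that the method never terminates is to work in the error coordinates suggested by the completed square. This sketch assumes that "steepest descent" means the exact-line-search variant, which the question does not state. Write $\mathbf{e}_k=\mathbf{x}_k-(2,1)^\top$ and note that $f(\mathbf{x})=\tfrac12\,\mathbf{e}^\top A\,\mathbf{e}-8$ with $A=\operatorname{diag}(2,8)$. Then:

$$
\begin{aligned}
\mathbf{g}_k &= \nabla f(\mathbf{x}_k) = A\,\mathbf{e}_k = \begin{pmatrix}2e_{k,1}\\ 8e_{k,2}\end{pmatrix},
\qquad
\alpha_k = \frac{\mathbf{g}_k^\top\mathbf{g}_k}{\mathbf{g}_k^\top A\,\mathbf{g}_k},\\
\mathbf{e}_{k+1} &= \mathbf{e}_k-\alpha_k\,\mathbf{g}_k = (I-\alpha_k A)\,\mathbf{e}_k
\quad\Longrightarrow\quad
e_{k+1,1}=(1-2\alpha_k)\,e_{k,1},\qquad e_{k+1,2}=(1-8\alpha_k)\,e_{k,2}.
\end{aligned}
$$

Suppose both components of $\mathbf{e}_k$ are nonzero. Then both components of $\mathbf{g}_k$ are nonzero too. The Rayleigh quotient $\mathbf{g}_k^\top A\,\mathbf{g}_k/\mathbf{g}_k^\top\mathbf{g}_k=(2g_{k,1}^2+8g_{k,2}^2)/(g_{k,1}^2+g_{k,2}^2)$ therefore lies strictly between $2$ and $8$, so $\tfrac18<\alpha_k<\tfrac12$. This gives $1-2\alpha_k\in(0,\tfrac34)$ and $1-8\alpha_k\in(-3,0)$. Both factors are nonzero, so both components of $\mathbf{e}_{k+1}$ are nonzero as well.

By induction, if the starting point differs from $(2,1)$ in both coordinates, no iterate ever equals $(2,1)$. Conversely, if one component of $\mathbf{e}_0$ is zero, then $\mathbf{g}_0$ is an eigenvector of $A$. In that case a single exact step, with $\alpha_0=\tfrac12$ or $\tfrac18$, lands exactly on the minimizer.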

I've deduced the minimizer $\mathbf{x}^*$ as $(2,1)$, with $f^*=f(\mathbf{x}^*)=-8$.
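You can watch this behaviour directly by running steepest descent in exact rational arithmetic, so that floating-point rounding cannot blur whether an iterate hits $(2,1)$ exactly. The sketch below makes three assumptions. It uses exact line search with $\alpha_k=\mathbf{g}_k^\top\mathbf{g}_k/\mathbf{g}_k^\top A\,\mathbf{g}_k$. It starts from the hypothetical point $(0,0)$. The helper names (`steepest_descent_exact`, `A_DIAG`, `B`, `X_STAR`) are illustrative only. The run illustrates the claim for the steps it computes; it does not prove it.

```python
from fractions import Fraction

# f(x) = x1^2 + 4*x2^2 - 4*x1 - 8*x2, written as 1/2 x^T A x - b^T x
# with A = diag(2, 8) and b = (4, 8); the minimizer is x* = (2, 1).
A_DIAG = (Fraction(2), Fraction(8))
B = (Fraction(4), Fraction(8))
X_STAR = (Fraction(2), Fraction(1))


def f(x):
    """Objective value, evaluated exactly on Fractions."""
    x1, x2 = x
    return x1**2 + 4 * x2**2 - 4 * x1 - 8 * x2


def grad(x):
    """Gradient A x - b = (2*x1 - 4, 8*x2 - 8)."""
    return tuple(a * xi - bi for a, xi, bi in zip(A_DIAG, x, B))


def steepest_descent_exact(x0, steps):
    """Steepest descent with exact line search alpha = (g.g) / (g.Ag).

    Returns the iterates x_0, x_1, ...; it stops early only if the
    gradient becomes exactly zero, i.e. the minimizer is hit exactly.
    """
    x = tuple(Fraction(v) for v in x0)
    iterates = [x]
    for _ in range(steps):
        g = grad(x)
        gg = sum(gi * gi for gi in g)
        if gg == 0:  # exact minimizer reached
            break
        gAg = sum(a * gi * gi for a, gi in zip(A_DIAG, g))
        alpha = gg / gAg  # exact minimizer of f(x - alpha * g) over alpha
        x = tuple(xi - alpha * gi for xi, gi in zip(x, g))
        iterates.append(x)
    return iterates


if __name__ == "__main__":
    # Hypothetical start: differs from x* = (2, 1) in both coordinates.
    path = steepest_descent_exact((0, 0), steps=20)
    for k, x in enumerate(path[:5]):
        print(k, [float(v) for v in x], float(f(x)))
    print("iterates:", len(path))
    print("minimizer hit exactly:", any(x == X_STAR for x in path))
    print("f(last iterate) + 8 =", float(f(path[-1]) + 8))
```

From $(0,0)$, the first step uses $\alpha_0=5/34$ and moves to $(10/17,\,20/17)$. The function values keep decreasing towards $-8$, but no iterate equals $(2,1)$. Starting instead from a point such as $(5,1)$, where $\mathbf{x}_0-\mathbf{x}^*$ lies along a coordinate axis (an eigenvector of $A$), the same routine reaches $(2,1)$ exactly after one step.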

optimization - How to show that the method of steepest descent does not converge in a finite number of steps? - Mathematics Stack Exchange

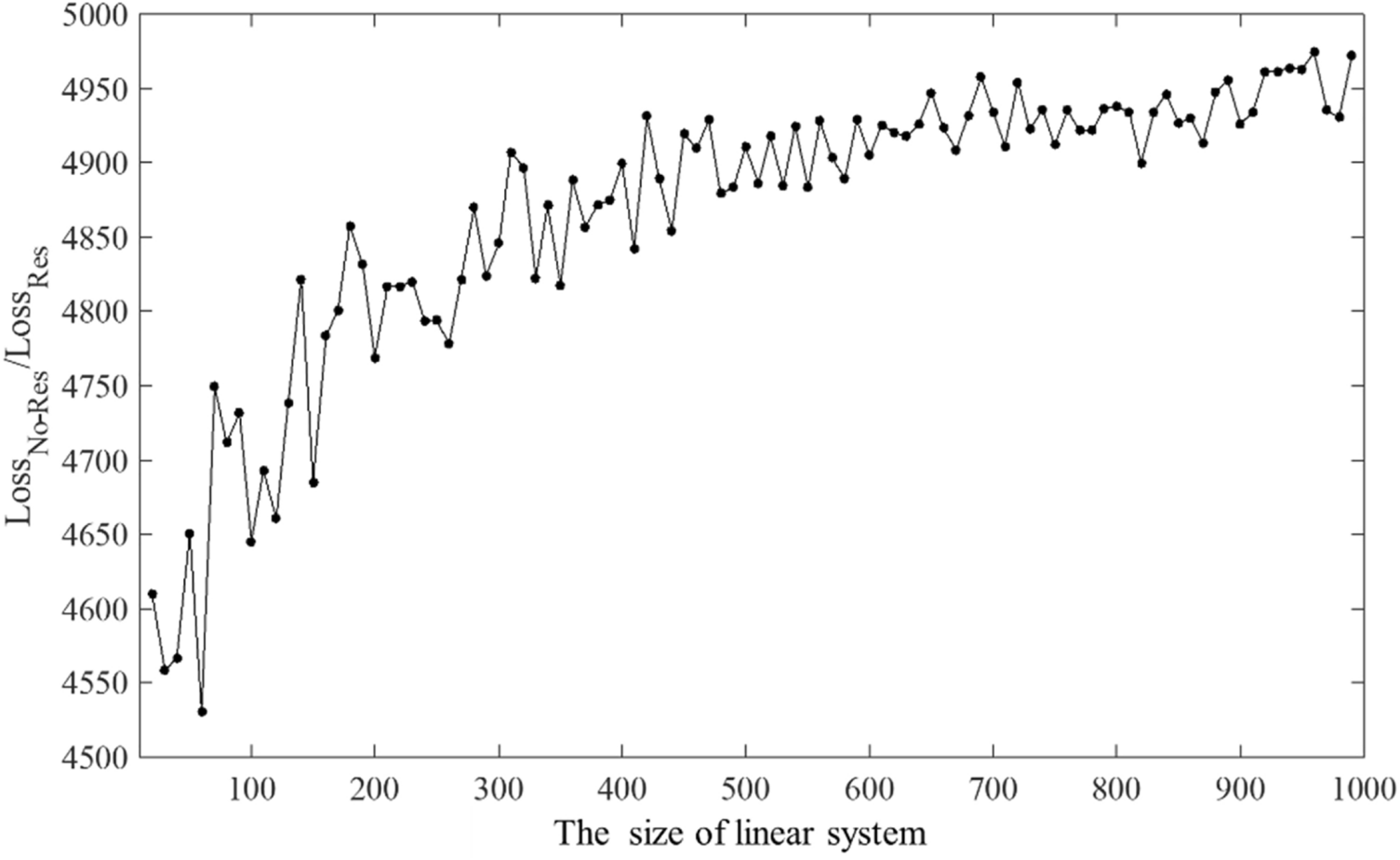

A neural network-based PDE solving algorithm with high precision

Comparing Number of Iterations Between Different Optimization Algorithms - Operations Research Stack Exchange

Steepest Descent - an overview

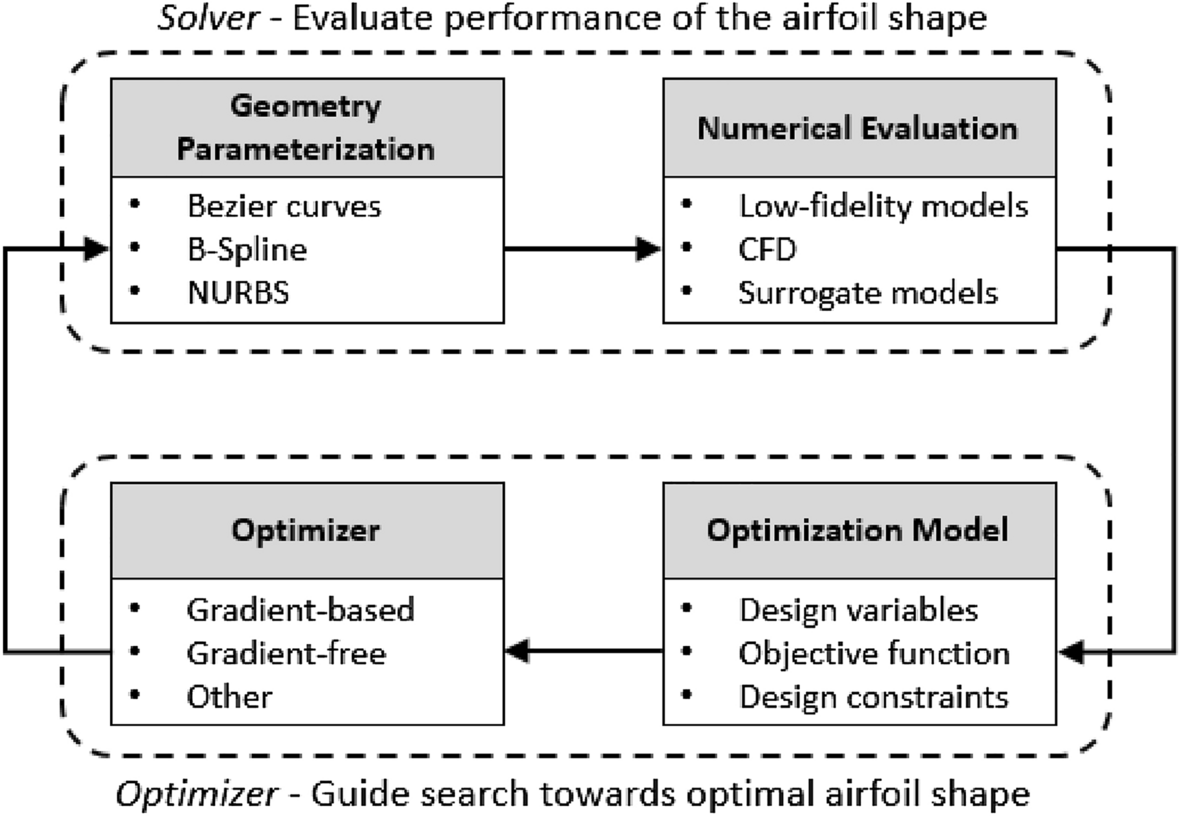

A reinforcement learning approach to airfoil shape optimization

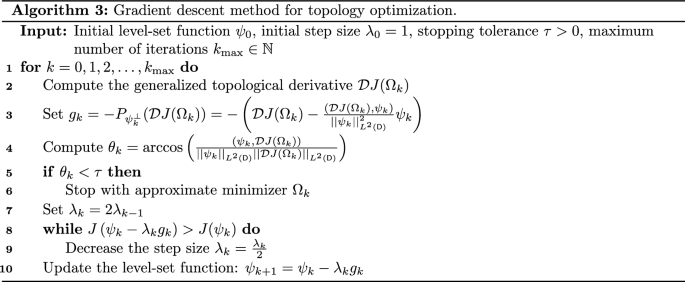

Quasi-Newton methods for topology optimization using a level-set method

python - Steepest Descent Trace Behavior - Stack Overflow

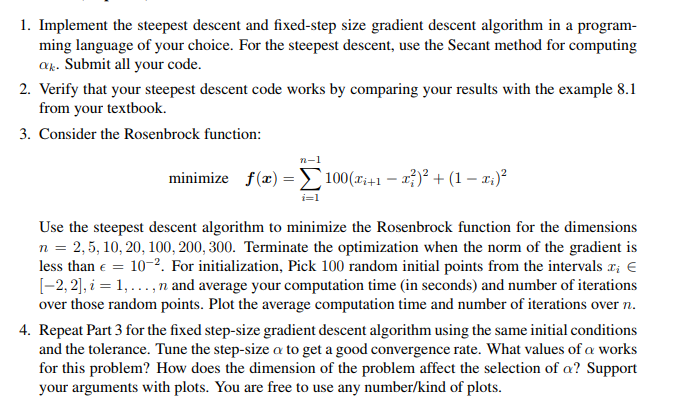

Solved 1. Implement the steepest descent and fixed-step size

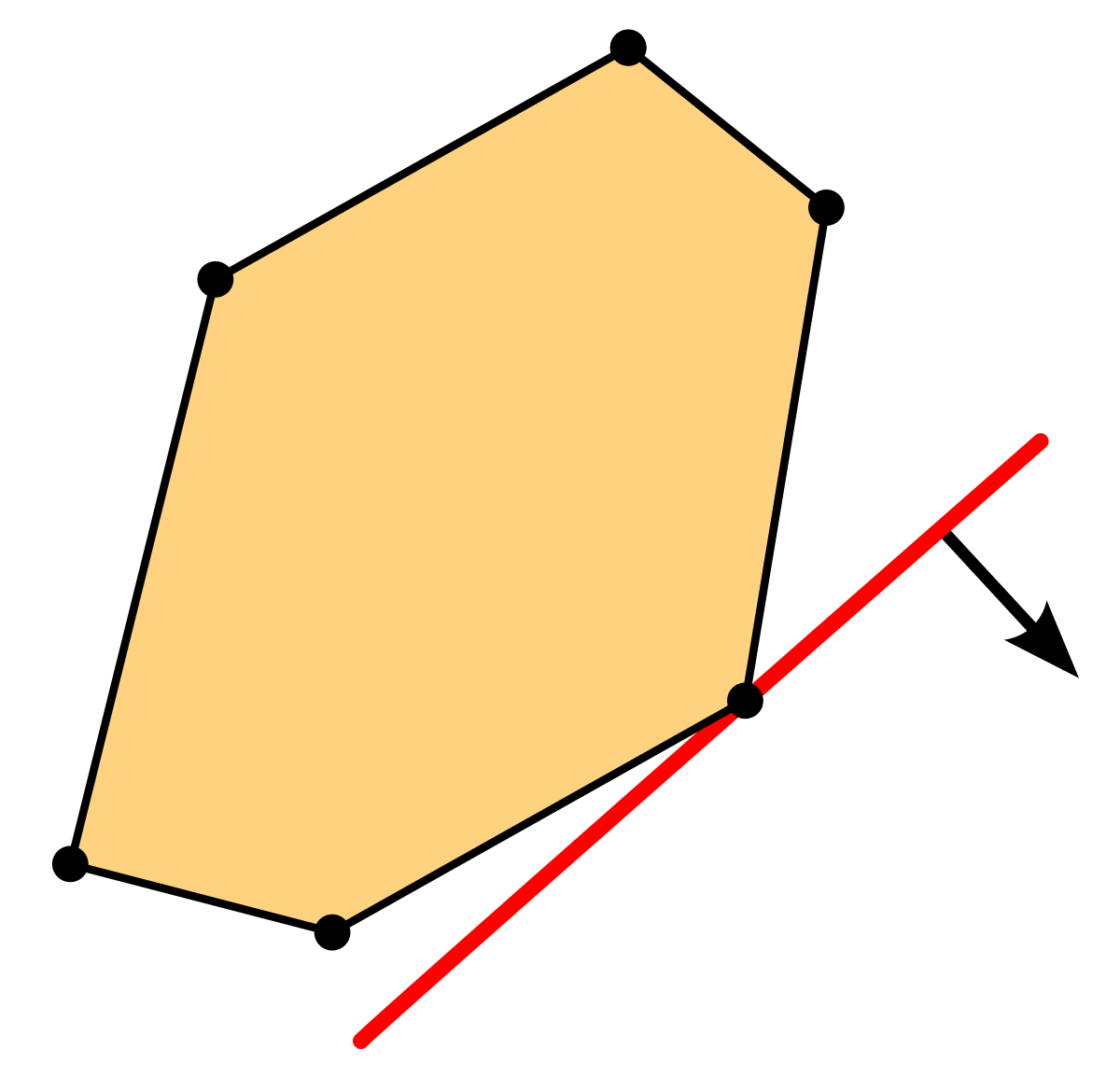

Linear programming - Wikipedia

ordinary differential equations - Finite-time criterion for ODE - Mathematics Stack Exchange

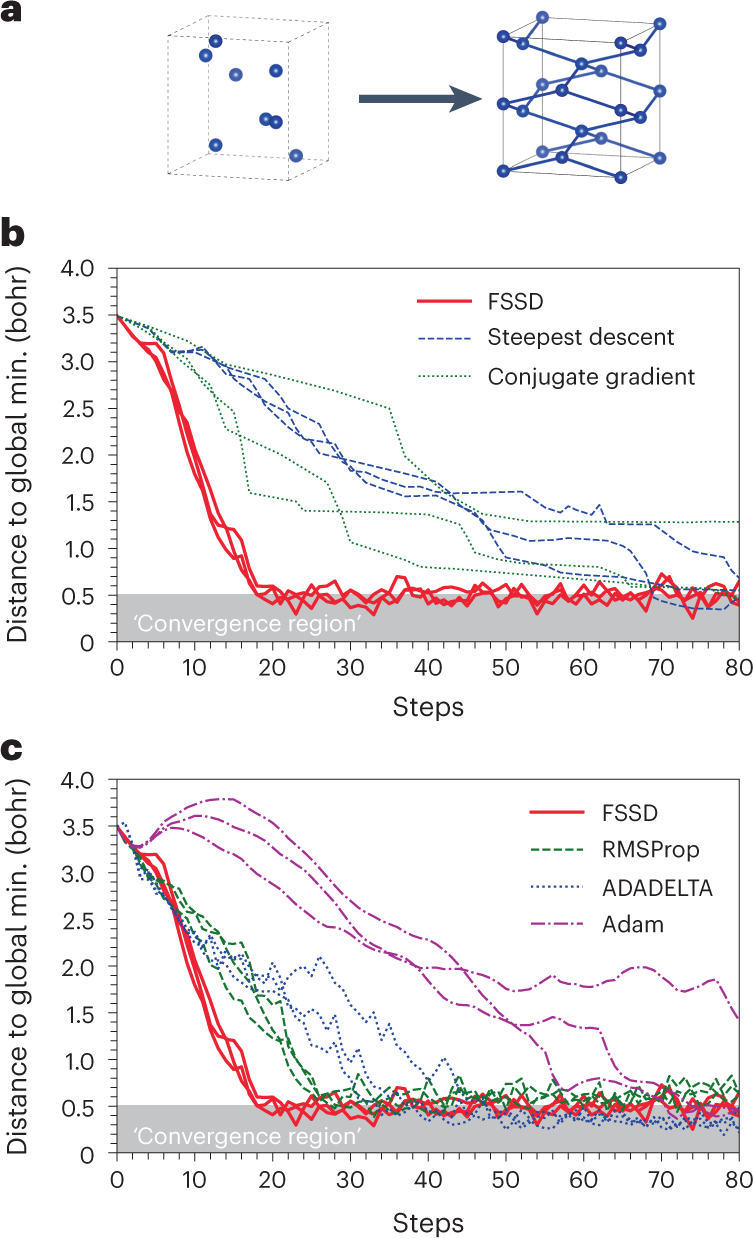

A structural optimization algorithm with stochastic forces and stresses

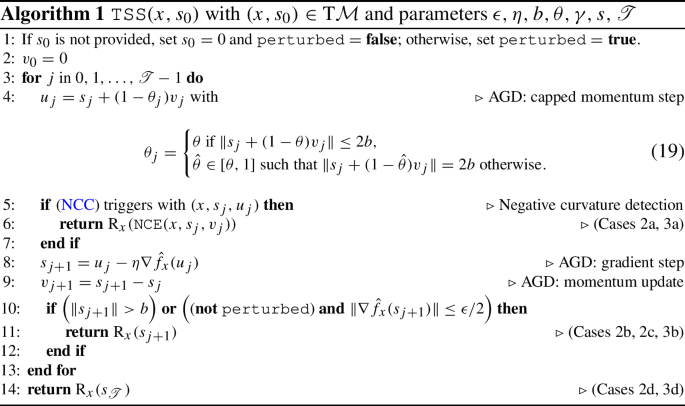

An Accelerated First-Order Method for Non-convex Optimization on Manifolds

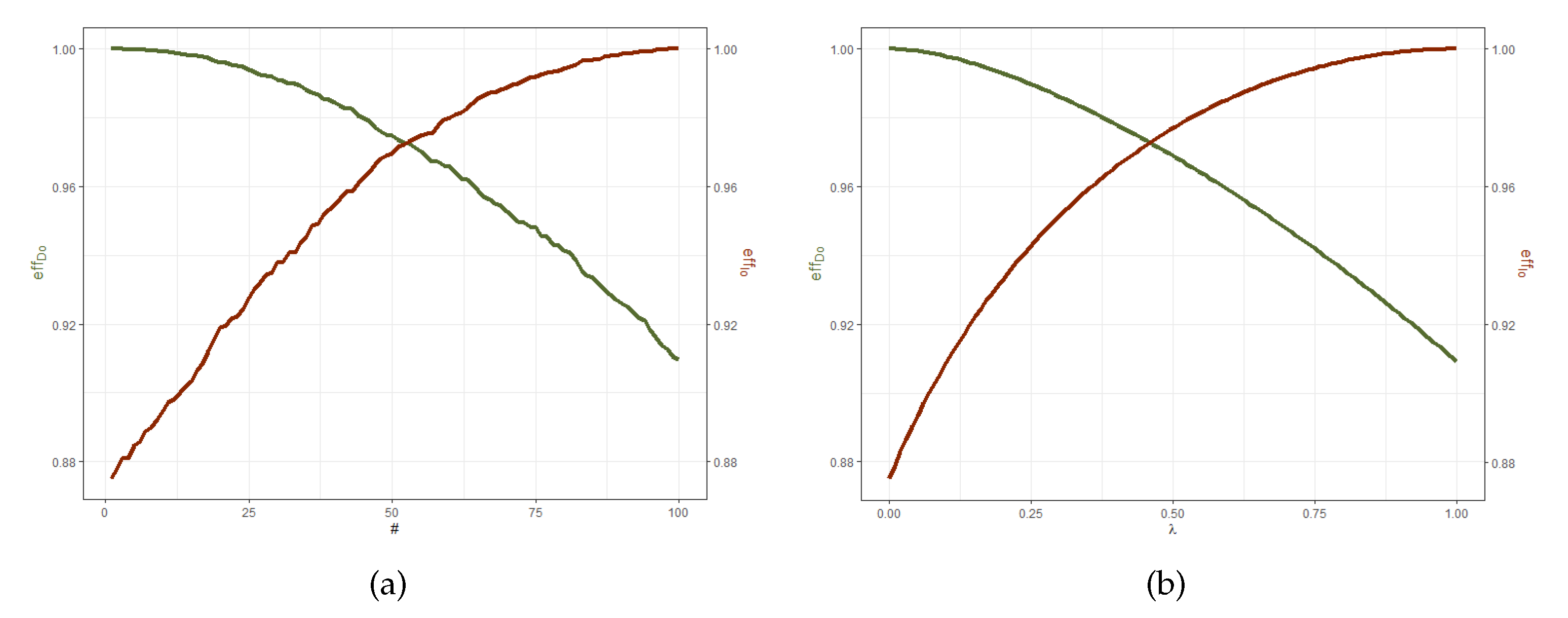

Mathematics, Free Full-Text


machine learning - Does gradient descent always converge to an optimum? - Data Science Stack Exchange