Supercharging AI Video and AI Inference Performance with NVIDIA L4 GPUs

By a mysterious writer

Description

NVIDIA T4 was introduced in 2018 as a universal GPU for use in mainstream servers. T4 GPUs achieved widespread adoption and are now the highest-volume NVIDIA data center GPU. T4 GPUs were deployed…

NVIDIA TensorRT-LLM Supercharges Large Language Model Inference on

AWS and NVIDIA Announce Strategic Collaboration to Offer New

Supercharging AI Video and AI Inference Performance with NVIDIA L4

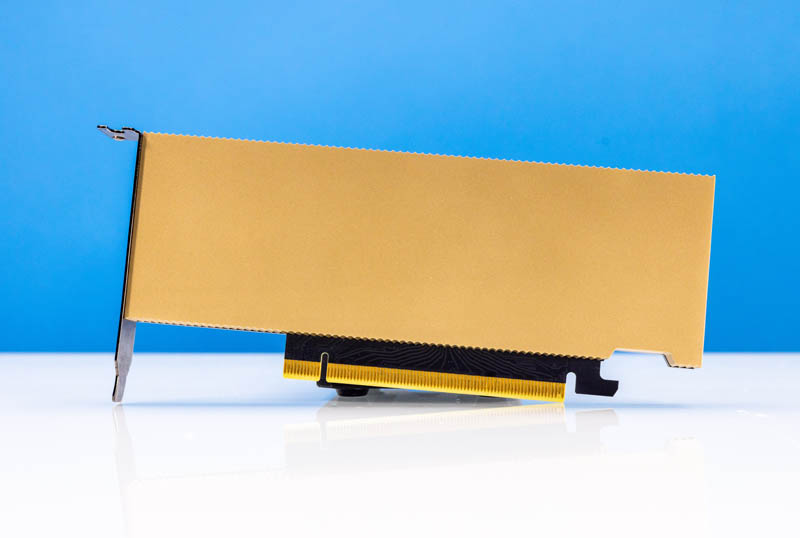

NVIDIA L4 24GB Review The Versatile AI Inference Card

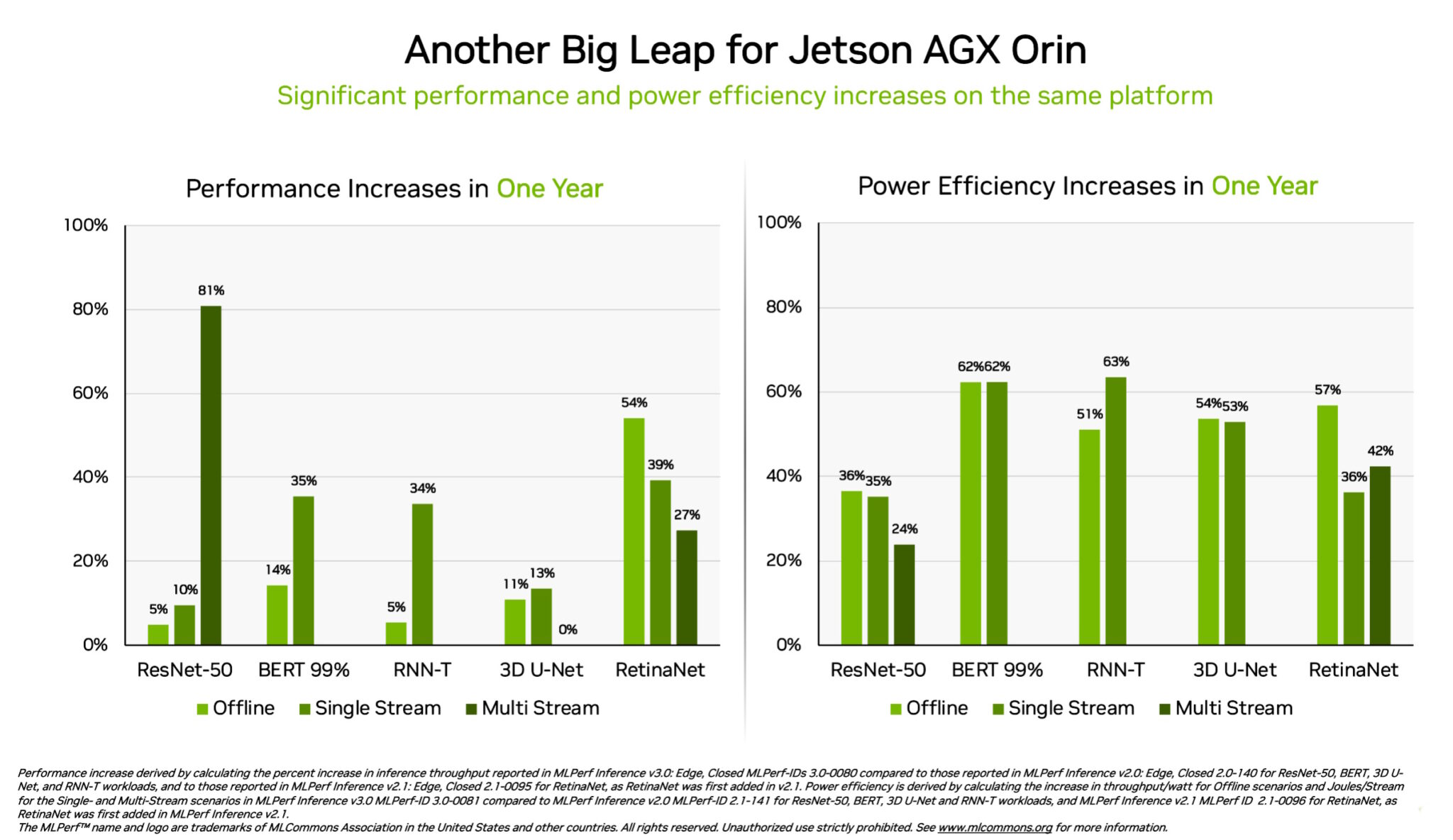

NVIDIA Hopper H100 & L4 Ada GPUs Achieve Record-Breaking

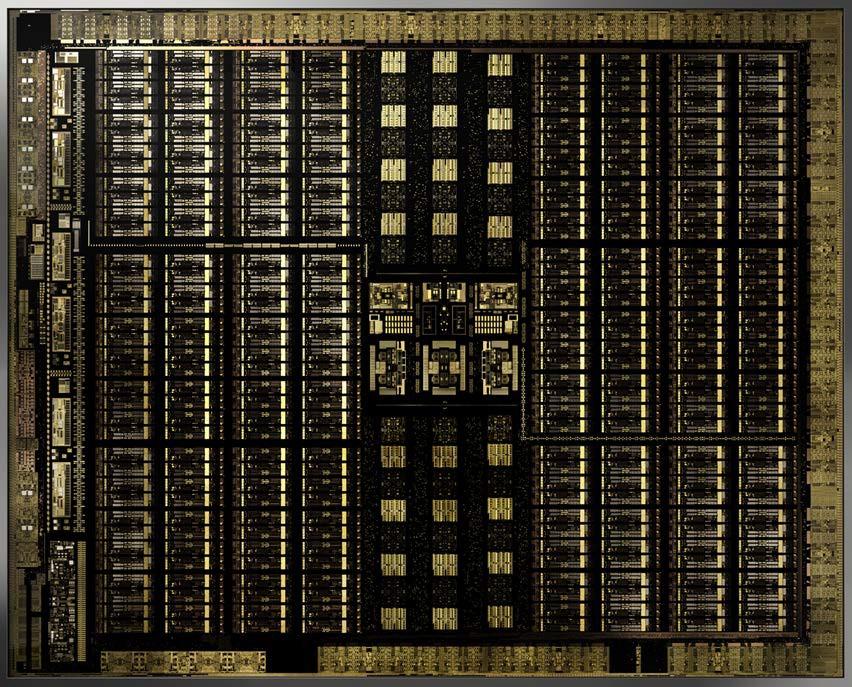

NVIDIA Turing Architecture In-Depth

H100, L4 and Orin Raise the Bar for Inference in MLPerf

Connect With the Experts—Omniverse Enterprise

L40S GPU for AI and Graphics Performance

Supercharging AI Video and AI Inference Performance with NVIDIA L4

NVIDIA-Certified Next-Generation Computing Platforms for AI, Video

Choosing a Server for Deep Learning Inference

Supercharging AI Video and AI Inference Performance with NVIDIA L4

Creating Smarter, Safer Airports with AI

NVIDIA Smashes Performance Records on AI Inference