RuntimeError: CUDA out of memory. Tried to allocate - Can I solve this problem? - windows - PyTorch Forums

By a mysterious writer

Description

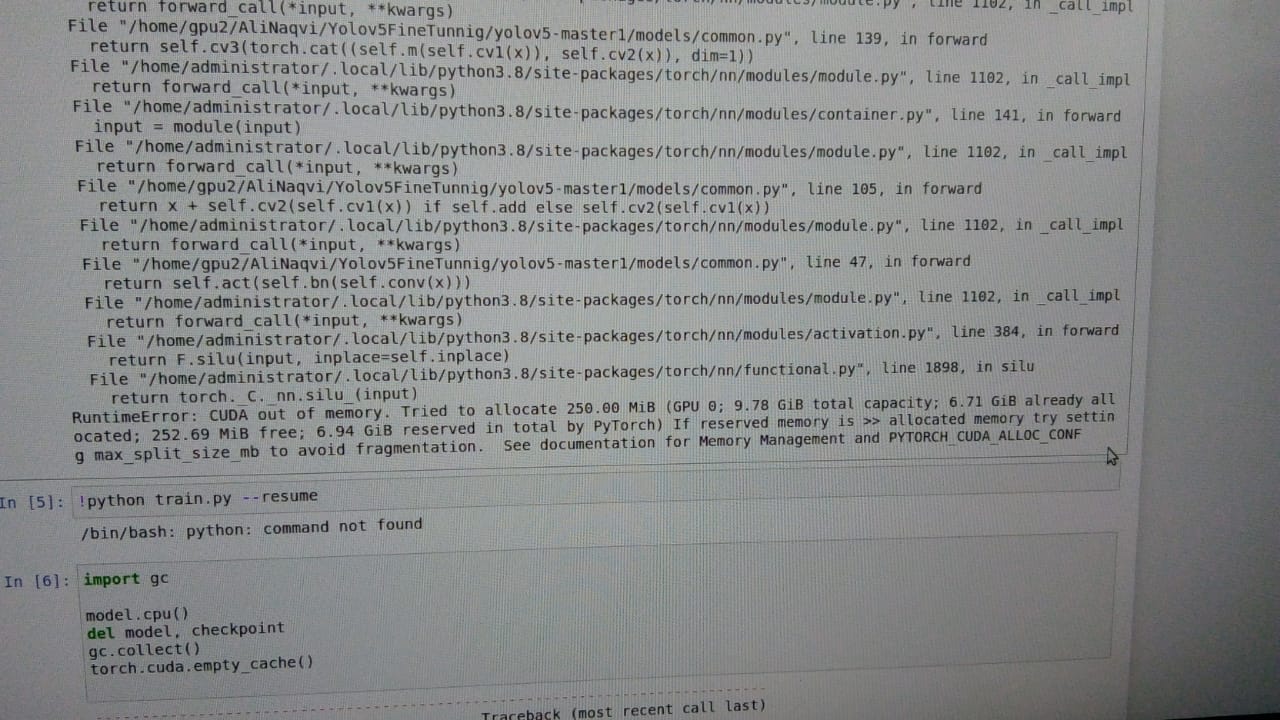

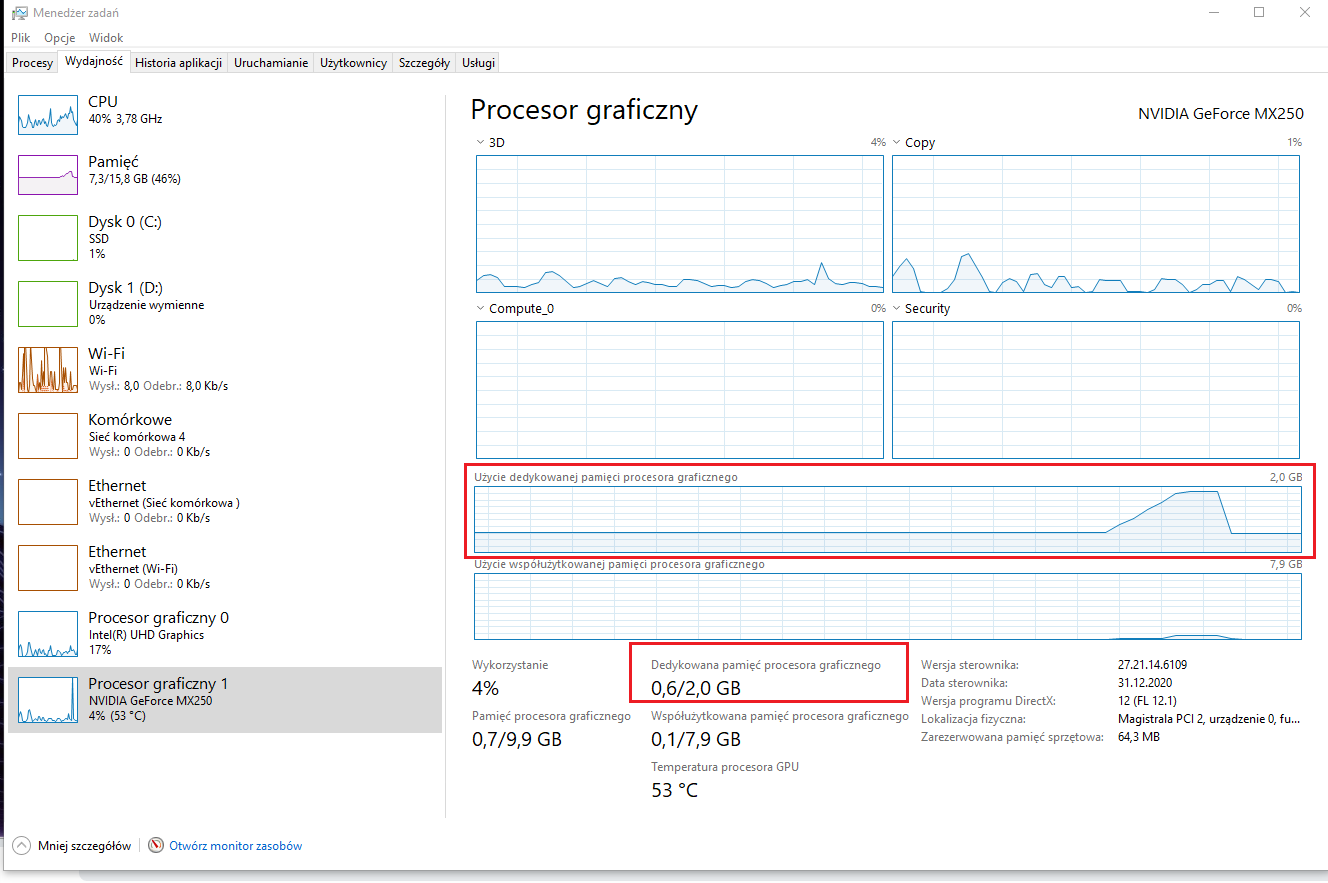

Hello everyone. I am trying to get CUDA working with the OpenAI Whisper release. My current setup works fine on the CPU with the medium.en model. I have installed CUDA-enabled PyTorch on a Windows 10 computer; however, when I try speech-to-text decoding with CUDA enabled, it fails with an out-of-memory error: RuntimeError: CUDA out of memory. Tried to allocate 70.00 MiB (GPU 0; 4.00 GiB total capacity; 2.87 GiB already allocated; 0 bytes free; 2.88 GiB reserved in total by PyTorch) If reserved memory is >> allo
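To make the numbers in that error easier to read, here is a minimal sketch that parses the message and compares the requested size with the gap between reserved and allocated memory. It uses only the Python standard library. The function name `parse_oom_message` is hypothetical, and the regular expression assumes the exact wording quoted in the post; other PyTorch versions may phrase the message differently.

```python
import re

# Unit multipliers for the sizes that appear in the quoted message.
_UNITS = {"bytes": 1, "KiB": 2**10, "MiB": 2**20, "GiB": 2**30}

# One "<number> <unit>" pair, e.g. "70.00 MiB" or "0 bytes".
_SIZE = r"([\d.]+) (bytes|KiB|MiB|GiB)"

# Assumption: the message follows the wording quoted in the post above.
_PATTERN = re.compile(
    rf"Tried to allocate {_SIZE} "
    rf"\(GPU (\d+); {_SIZE} total capacity; "
    rf"{_SIZE} already allocated; "
    rf"{_SIZE} free; "
    rf"{_SIZE} reserved in total by PyTorch\)"
)


def _to_bytes(value: str, unit: str) -> int:
    """Convert a printed size such as ("2.87", "GiB") to whole bytes."""
    return int(float(value) * _UNITS[unit])


def parse_oom_message(message: str) -> dict:
    """Hypothetical helper: extract the sizes from an OOM message, in bytes."""
    m = _PATTERN.search(message)
    if m is None:
        raise ValueError("message does not match the expected OOM format")
    return {
        "requested": _to_bytes(m[1], m[2]),
        "gpu": int(m[3]),
        "total": _to_bytes(m[4], m[5]),
        "allocated": _to_bytes(m[6], m[7]),
        "free": _to_bytes(m[8], m[9]),
        "reserved": _to_bytes(m[10], m[11]),
    }


MESSAGE = (
    "RuntimeError: CUDA out of memory. Tried to allocate 70.00 MiB "
    "(GPU 0; 4.00 GiB total capacity; 2.87 GiB already allocated; "
    "0 bytes free; 2.88 GiB reserved in total by PyTorch)"
)

info = parse_oom_message(MESSAGE)

# Memory the caching allocator holds but that is not backing live tensors.
cached_unused = info["reserved"] - info["allocated"]

print(f"requested:     {info['requested'] / 2**20:.2f} MiB")
print(f"cached unused: {cached_unused / 2**20:.2f} MiB")
print(f"request exceeds cached unused: {info['requested'] > cached_unused}")
```

In this message, reserved and allocated memory differ by only about 0.01 GiB, far less than the 70 MiB request. Read that way, the reserved pool is almost entirely in use, so the card appears to be genuinely full rather than fragmented. A smaller model or a lower-memory configuration is then the more likely remedy than allocator tuning.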

CUDA out of memory, but it shows enough memory available in error

CUDA Out of Memory on RTX 3060 with TF/Pytorch - cuDNN - NVIDIA

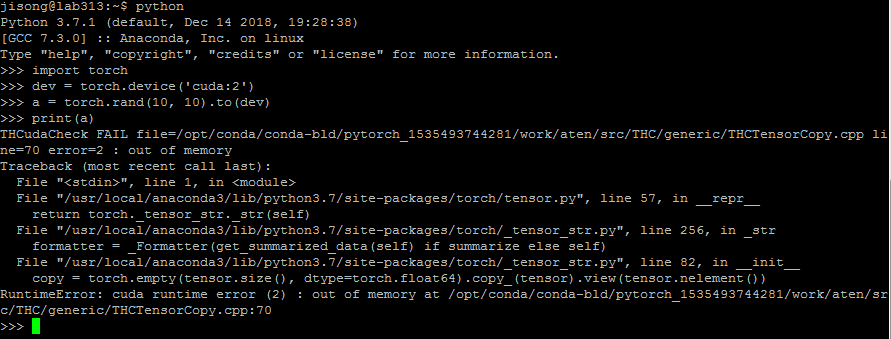

GPU is not utilized while occur RuntimeError: cuda runtime error

python - How to avoid RuntimeError: CUDA out of memory. during

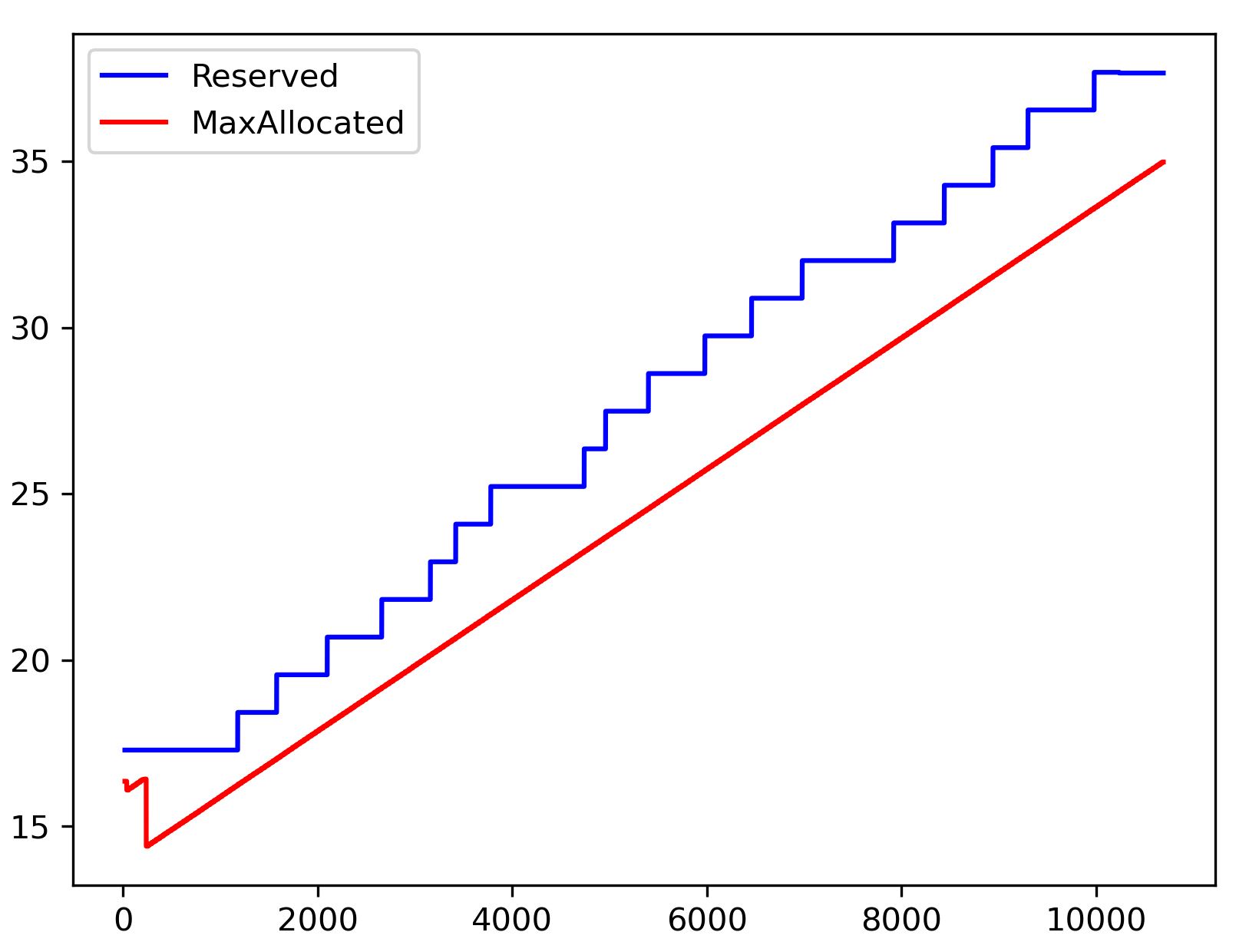

Memory Management using PYTORCH_CUDA_ALLOC_CONF - PyTorch Forums

Wav2vec fine-tuning with multiGPU - Models - Hugging Face Forums

My free memory is MUCH LARGER than allocating memory and I still

anon8231489123/gpt4-x-alpaca-13b-native-4bit-128g · out of memory

RuntimeError: CUDA error: CUBLAS_STATUS_ALLOC_FAILED when calling

A guide to recovering from CUDA Out of Memory and other exceptions

python - How to solve RuntimeError: CUDA out of memory.? Is
