Can You Close the Performance Gap Between GPU and CPU for Deep Learning Models? - Deci

By a mysterious writer

Description

How can we optimize deep learning models' performance on CPUs? This post discusses model efficiency and the performance gap between GPU and CPU inference. Read on.

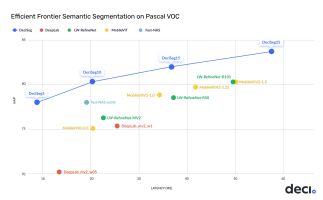

Deci Advanced semantic segmentation models deliver 2x lower latency, 3-7% higher accuracy

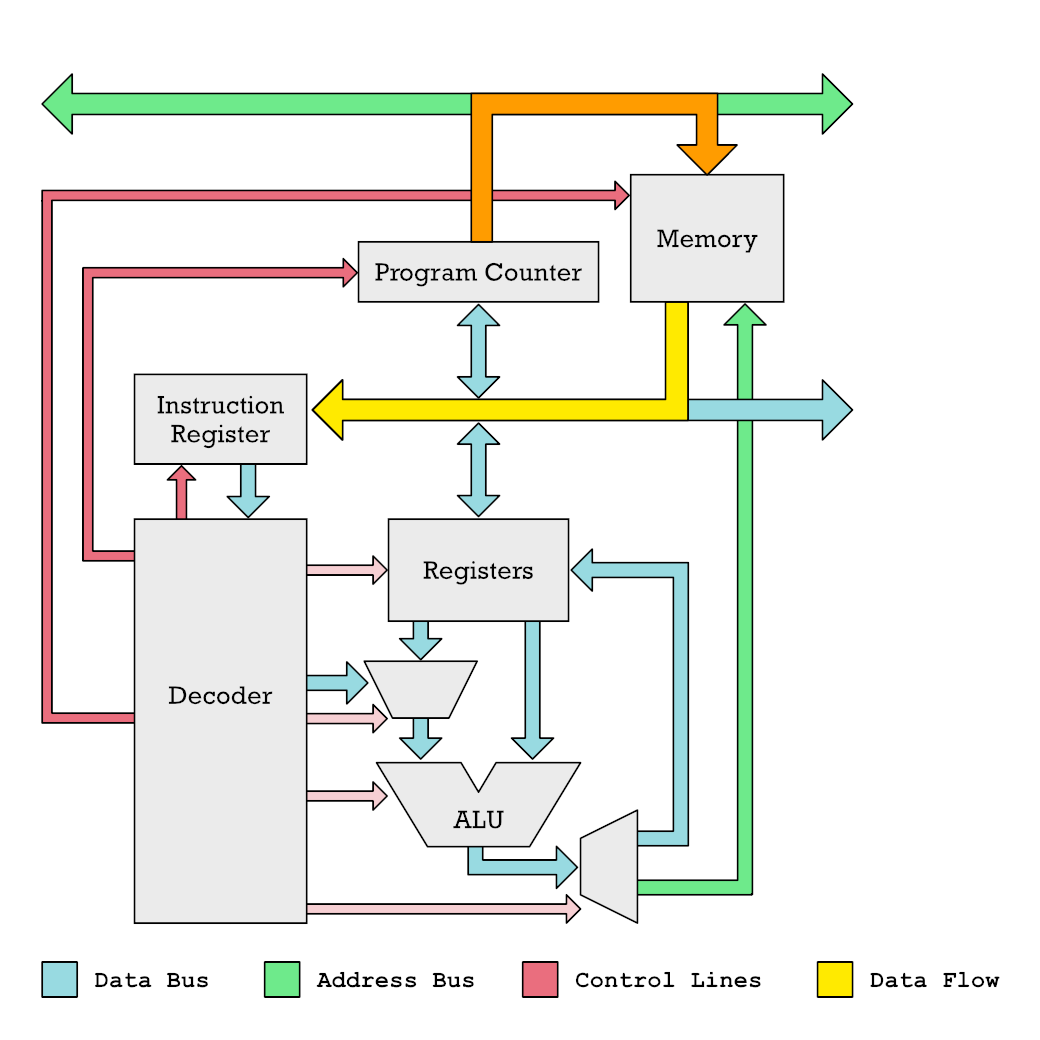

A high-level view of an integrated CPU/GPU architecture.

Measuring Neural Network Performance: Latency and Throughput on GPU, by YOUNESS-ELBRAG

Vector Processing on CPUs and GPUs Compared, by Erik Engheim

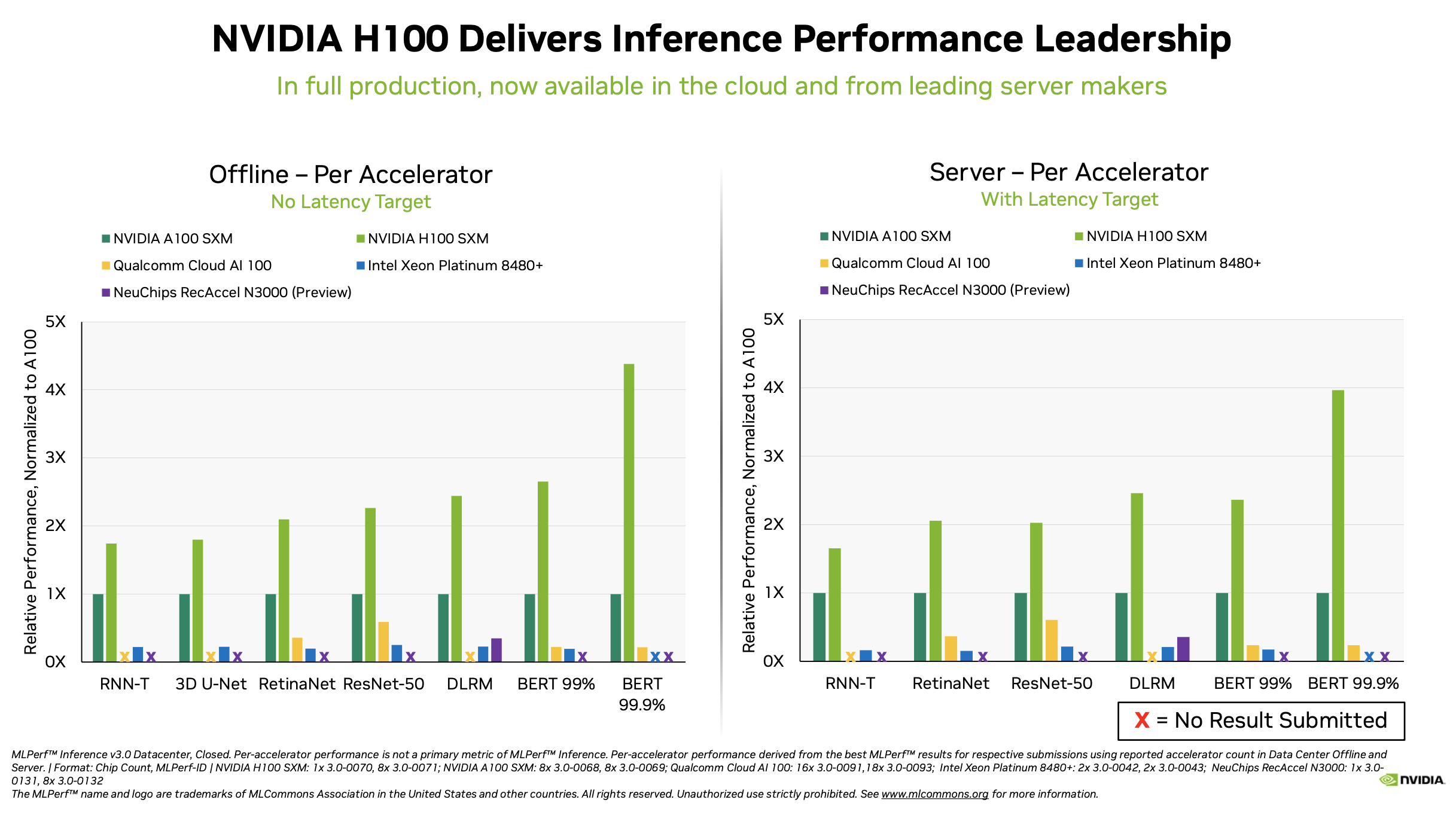

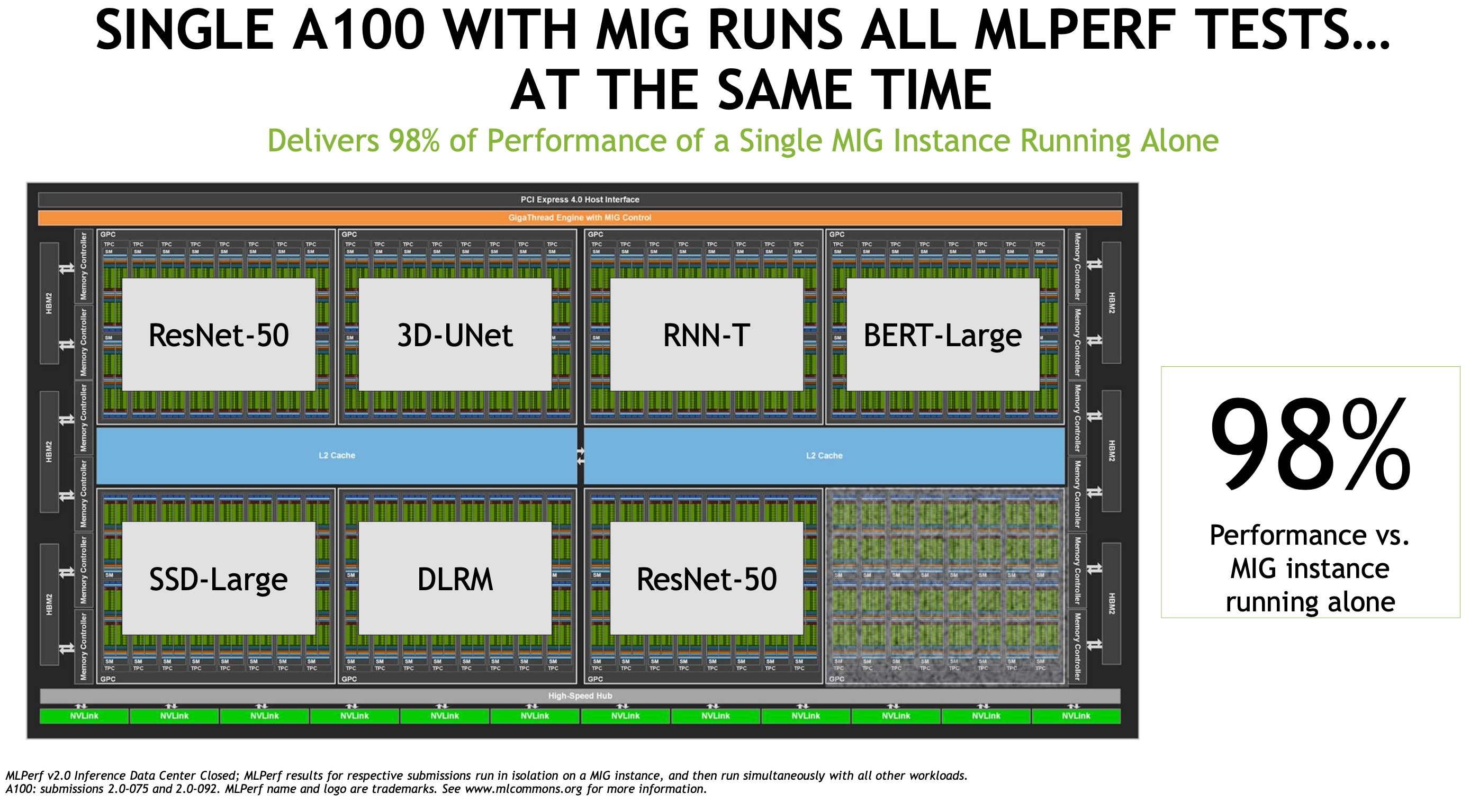

MLPerf Inference 3.0 Highlights - Nvidia, Intel, Qualcomm and…ChatGPT

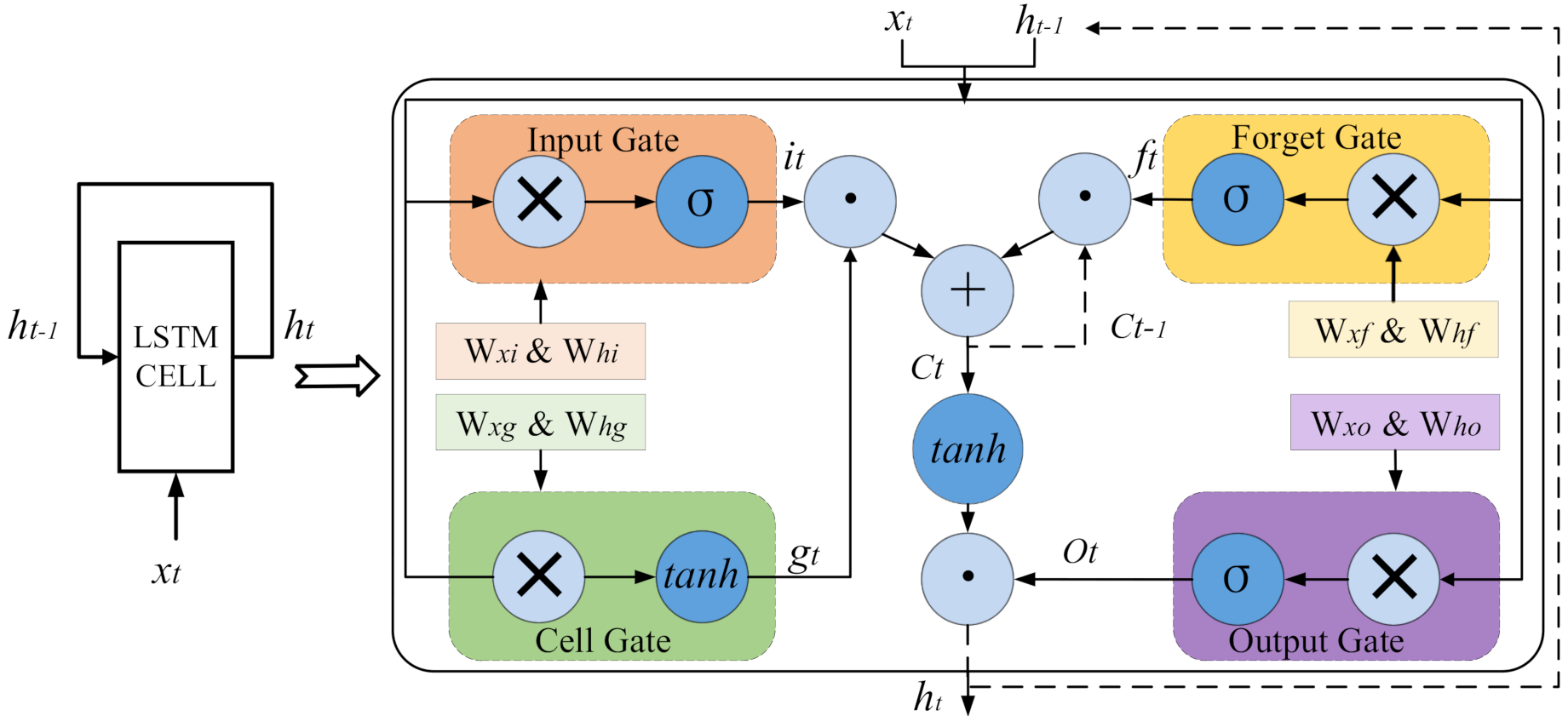

The Deep Learning Inference Acceleration Blog Series — Part 1: Introduction, by Amnon Geifman

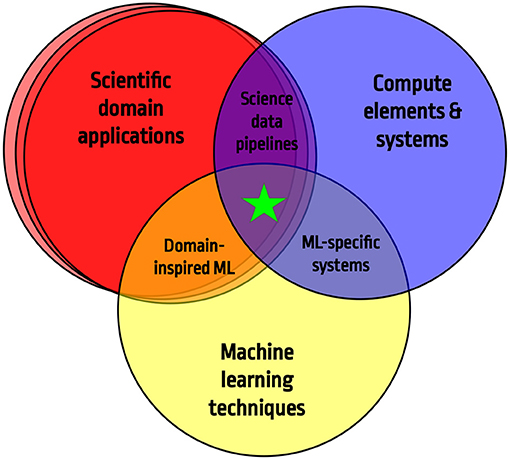

Frontiers Applications and Techniques for Fast Machine Learning in Science

The Definitive Guide to Deep Learning with GPUs

How GPUs Accelerate Deep Learning

Performance Analysis and CPU vs GPU Comparison for Deep Learning

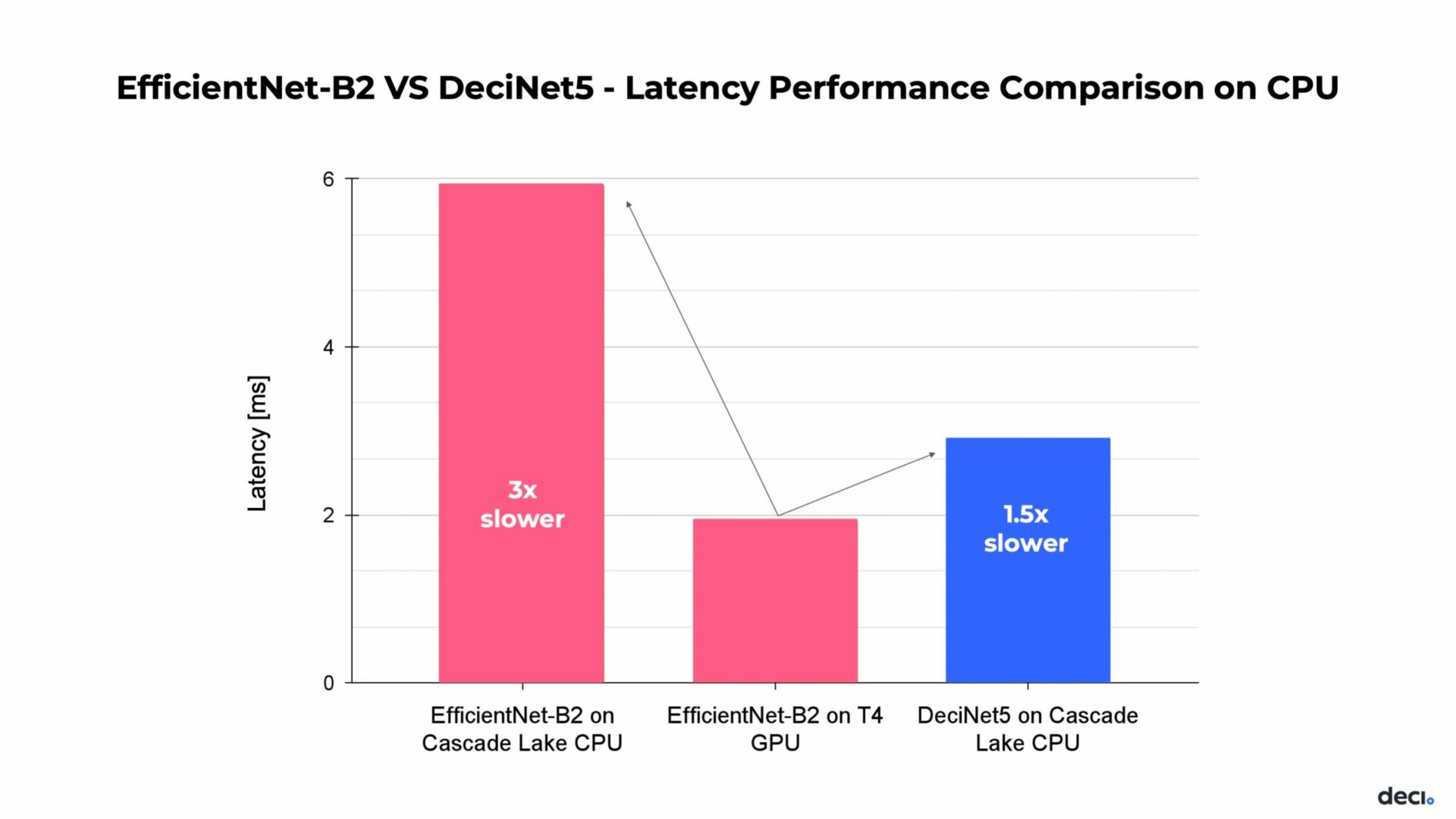

Nvidia Dominates MLPerf Inference, Qualcomm also Shines, Where's Everybody Else?

Deci Points to CPUs for AI

Electronics, Free Full-Text